MAY 23, 2025 / Gemini

Announcing new features and models for the Gemini API, with the introduction of Gemini 2.5 Flash Preview with improved reasoning and efficiency, Gemini 2.5 Pro and Flash text-to-speech supporting multiple languages and speakers, and Gemini 2.5 Flash native audio dialog for conversational AI.

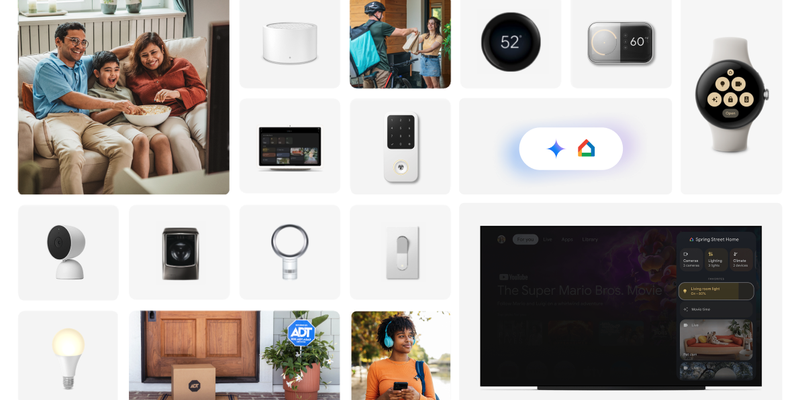

MAY 22, 2025 / Smart Home

Gemini intelligence is being integrated into Google Home APIs, offering developers access to over 750 million devices and enabling advanced features like AI-powered camera analysis and automated routines.

MAY 21, 2025 / Google AI Studio

Google AI Studio has been upgraded to enhance the developer experience, featuring native code generation with Gemini 2.5 Pro, agentic tools, and enhanced multimodal generation capabilities, plus new features like the Build tab, Live API, and improved tools for building sophisticated AI applications.

MAY 20, 2025 / AI Edge

Google AI Edge advancements include new Gemma 3 models, broader model support, and features like on-device RAG and function calling that enhance on-device generative AI capabilities.

MAY 20, 2025 / Gemini

Google Gemini models offer several advantages when building AI agents, such as advanced reasoning, function calling, multimodality, and large context window capabilities. Open-source frameworks like LangGraph, CrewAI, LlamaIndex, and Composio can be used with Gemini for agent development.

MAY 20, 2025 / AI Edge

LiteRT has been improved to boost AI model performance and efficiency on mobile devices by making effective use of GPUs and NPUs. It now requires significantly less code and simplifies hardware accelerator selection, among other changes aimed at optimal on-device performance.

MAY 20, 2025 / Gemini

Google Colab is launching a reimagined AI-first version at Google I/O, featuring an agentic collaborator powered by Gemini 2.5 Flash with iterative querying capabilities, an upgraded Data Science Agent, effortless code transformation, and flexible interaction methods, aiming to significantly improve coding workflows.

MAY 20, 2025 / Android

Top announcements from Google I/O 2025 center on building across Google platforms and innovating with AI models from Google DeepMind, with a key focus on new tools, APIs, and features designed to enhance developer productivity and create AI-powered experiences using Gemini, Android, Firebase, and the web.

MAY 20, 2025 / Cloud

Updates to Google's agent technologies include the Agent Development Kit (ADK) with new Python and Java versions, an improved Agent Engine UI for management, and enhancements to the Agent2Agent (A2A) protocol for better agent communication and security.

MAY 20, 2025 / Gemini

Stitch, a new Google Labs experiment, uses AI to generate UI designs and front-end code from text prompts and images, aiming to streamline the design and development workflow. Features include UI generation from natural language or images, rapid iteration, and seamless paste to Figma and front-end code.